-

1904: Two-electrode vacuum tube is invented

Thomas Alva Edison, the inventor of the first practical incandescent light bulb, had also noticed that direct current flowed from a heated metal filament in the bulb to a second electrode only when that electrode was at a positive voltage. John Ambrose Fleming used this phenomenon, known as the Edison effect, to invent the two-electrode vacuum tube rectifier, which soon came to play an important role in electronic circuits.

* The photo is for illustration purposes only

-

1946: World's first general-purpose computer ENIAC is announced

ENIAC was the largest electronic machine built up to that time: it contained some 18,000 vacuum tubes, weighed about 30 tons, and occupied a 160-square-meter room.

The computer consisted of a total of about 110,000 electronic circuit devices.

In contrast, today's microprocessors typically integrate billions of transistors, yet fit easily in the palm of your hand. This astonishing reduction in size began when solid-state semiconductor devices replaced vacuum tubes.

-

1948: Junction-type transistor is invented

Toward the end of 1947, John Bardeen and Walter Brattain at AT&T Bell Labs developed the point-contact transistor, the first transistor ever built. William Shockley, who led their group, continued this research and in 1948 devised the mechanically more robust junction-type transistor, filing a patent application for it in June of that year.

Subsequently came integrated circuits (ICs), which pack large numbers of transistors onto a small chip, followed by large-scale integrated circuits (LSIs) with 1,000 to 100,000 or more components per chip.

* The photo is for illustration purposes only

-

1955: Japan's first transistor radio is released

Early broadcast radio receivers used vacuum tubes. Replacing them with semiconductor transistors made radios smaller, lighter, and less power-hungry. Tokyo Tsushin Kogyo (Tokyo Telecommunications Engineering Corporation; now Sony) released Japan's first transistor radio, the TR-55, in 1955. While trying to improve the yield of its transistor manufacturing process, the company's research team observed the quantum mechanical effect known as tunneling in semiconductor junctions.

* The photo is for illustration purposes only

-

1957: Esaki diode is invented

While pursuing research aimed at faster transistors, Leo Esaki fabricated heavily doped germanium p-n junctions whose junction region was extremely thin, about 10 nm or less, and discovered that electrons could tunnel through this thin barrier.

The Esaki diode was the first electronic device to exploit this effect, and it became a major springboard for the subsequent development of LSIs.

* The photo is for illustration purposes only

-

1959: Kilby patent is filed

The Kilby patent covers the basic concept of the integrated circuit developed by Jack St. Clair Kilby at Texas Instruments (TI). This far-reaching patent earned TI huge royalties. Because Japan delayed granting the patent for many years, it did not expire there until 2001. As a result, Japanese semiconductor manufacturers had to pay large licensing fees on some of their products.

* The photo is for illustration purposes only

-

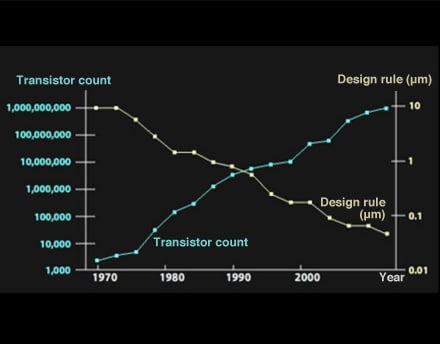

1965: Moore's law is announced

In 1965, Gordon Moore (who later co-founded Intel) predicted that the number of components on an integrated circuit would keep doubling at a regular interval. He originally projected a doubling every year and revised this to every two years in 1975; the widely quoted figure of every 18 months implies a fourfold increase in three years and roughly a 1,000-fold increase in 15 years. The prediction, known as Moore's law, was based on historical trends in integrated circuit manufacturing. It was widely accepted in the semiconductor and computer industries, and for decades actual progress tracked it closely.
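The figures above follow from simple exponential growth: after t years with a doubling period T, the component count grows by a factor of 2^(t/T). The minimal Python sketch below takes the 18-month (1.5-year) period at face value; the function name `growth_factor` is purely illustrative.

```python
def growth_factor(years: float, doubling_period_years: float = 1.5) -> float:
    """Factor by which the component count grows after `years`,
    assuming it doubles once every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


for span in (3, 15):
    print(f"{span} years: x{growth_factor(span):,.0f}")
# prints "3 years: x4" and "15 years: x1,024"
```

Fifteen years at 18 months per doubling is ten doublings, and 2^10 = 1,024, which is where the round figure of 1,000-fold comes from.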

-

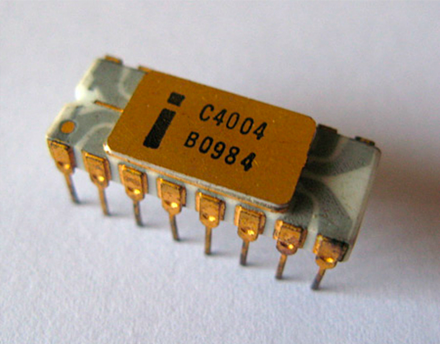

1971: Intel 4004 is released

The Intel 4004 was the world's first single-chip microprocessor. It was originally developed at the request of Busicom Corporation of Japan as an LSI for its electronic calculators. Intel's Ted Hoff proposed the idea of a universal logic processor, and Masatoshi Shima of Busicom and Federico Faggin of Intel completed the chip design. Recognizing the chip's potential versatility, Intel obtained the rights to sell it.

* Photo credit: Dentaku Museum

-

1977: World's first personal computer Apple II is released

Although many consider the Altair 8800 to be the world's first personal computer, its manufacturer billed it as a minicomputer, and it was also described as a microcomputer or a home computer. Apple's Steve Jobs, by contrast, used the term "personal computer," which took hold with the commercial success of the Apple II.

* The photo is for illustration purposes only

-

1980: Flash memory is invented

Flash memory is a rewritable, non-volatile semiconductor memory (i.e., it retains data when the power is turned off). Unlike earlier rewritable memory, which could erase data only one byte at a time, flash memory was designed to erase an entire block of data at once and to cut the cost per bit by 75% or more.
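The difference between byte-wise and block-wise erasure can be illustrated with a toy model. The sketch below is a simplification under stated assumptions: it models only the common flash convention that erasing sets a whole block to all 1 bits (0xFF) and that writing can only change bits from 1 to 0. The class `ToyFlashBlockDevice` and its methods are hypothetical and do not correspond to any real driver API; wear, page-level programming, and error correction are ignored.

```python
class ToyFlashBlockDevice:
    """Hypothetical toy model of flash memory: erase works on whole blocks,
    and programming can only clear bits (1 -> 0)."""

    ERASED = 0xFF  # an erased byte has all bits set to 1

    def __init__(self, num_blocks: int, block_size: int):
        self.block_size = block_size
        self.data = bytearray([self.ERASED] * (num_blocks * block_size))

    def erase_block(self, block: int) -> None:
        # One operation resets every byte in the block, not a single byte.
        start = block * self.block_size
        self.data[start:start + self.block_size] = bytes([self.ERASED]) * self.block_size

    def program(self, addr: int, value: int) -> None:
        if not 0 <= value <= 0xFF:
            raise ValueError("value must fit in one byte")
        # Bitwise AND: bits can go from 1 to 0, never from 0 back to 1.
        self.data[addr] &= value

    def read(self, addr: int) -> int:
        return self.data[addr]


dev = ToyFlashBlockDevice(num_blocks=2, block_size=4)
dev.program(0, 0x0F)   # byte 0 becomes 0x0F
dev.program(0, 0xF0)   # cannot restore 1 bits: byte 0 becomes 0x00
dev.erase_block(0)     # bytes 0-3 all return to 0xFF
```

In this model, turning a single byte from 0x00 back to 0xFF requires erasing its entire block, which is the trade-off that block-level erasure implies.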

Flash memory was invented in 1980 by Fujio Masuoka (then a Toshiba employee). Today, flash memory is found in personal computers, mobile phones, digital cameras, IC cards, and many other applications that we use every day.

* The photo is for illustration purposes only

-

1983: Family Computer is released

Advances in semiconductor technology have revolutionized family entertainment as well. In 1983, Nintendo released a video game console fitted with an 8-bit CPU. The Family Computer (Famicom) became a smash hit on the back of the popularity of Super Mario Bros., a game Nintendo developed for the platform. Encouraged by the Famicom's success, the company released a 16-bit console, the Super Famicom, in 1990.

The video game market thrived as palm-sized handheld consoles and other variations entered the fray, and consoles have grown more advanced than ever.

* The photo is for illustration purposes only

-

1991: Carbon nanotube is discovered

The evolution of semiconductors can no longer be discussed without reference to advances in nanotechnology. Sumio Iijima's discovery of the carbon nanotube in 1991 was a pivotal event in this field.

Carbon nanotubes have many excellent properties, including a current-carrying capacity more than 1,000 times that of copper and about 10 times copper's thermal conductivity at room temperature. These properties make carbon nanotubes a promising successor to silicon as semiconductor scaling continues.

* The photo is for illustration purposes only

-

1993: First practical blue LED developed

Light-emitting diodes (LEDs) have long been considered ideal for display panels because they consume very little power to produce light. Full-color displays, however, require all three primary colors (red, green, and blue), and there was no practical way to manufacture blue LEDs until 1993, when Shuji Nakamura at Nichia Corporation developed the technology. This achievement made full-color LED displays possible and also led to the blue laser technology behind advanced DVD recorders and other applications.

* The photo is for illustration purposes only

-

1995: Sharp releases liquid crystal display TV "Window"

In 1982, Epson released the world's first LCD television. It was a digital watch with a 1.2-inch reflective LCD that doubled as a TV. Epson went on to release a pocket color TV with a 1.2-inch transmissive TFT LCD in 1984.

No LCD TV with a reasonably large screen was available until 1995, when Sharp introduced a 10.4-inch TFT model in its Window series.

* The photo is for illustration purposes only

-

2002: Earth Simulator records world's fastest processing speed of 35.86 TFLOPS

The Earth Simulator was a highly parallel vector supercomputer built under the auspices of Japan's former Science and Technology Agency. Designed to study global climate change and the mechanisms of crustal movement and deformation, it set a world record of 35.86 TFLOPS on the LINPACK benchmark in June 2002. This performance, about 36 trillion floating-point operations per second, was almost five times that of the runner-up, IBM's ASCI White system. The Earth Simulator held the top position on the TOP500 list for about two and a half years.

The defeat was dubbed "Computenik" in the U.S., as it was reminiscent of the shock caused by the Soviet satellite Sputnik. Determined to push back, the U.S. government set up a national supercomputer project and regained the top spot in November 2004.

* Screen shot of an article on the TOP500 website

-

2004: Graphene creation experiments prove successful

Graphene, a sheet of carbon just one atom thick, is attracting attention as a high-performance semiconductor material that could one day replace silicon. Its existence was predicted as early as the 1940s, but graphene was isolated only much later: in 2004, Andre Geim and Konstantin Novoselov used ordinary adhesive tape to peel thin layers from a block of graphite, eventually obtaining single-layer graphene.

Graphene is expected to enable highly efficient solar cells, very sensitive touch panels, and many other useful applications.

* The photo is for illustration purposes only

-

2007: Apple announces the iPhone

Steve Jobs's announcement of the iPhone on January 9, 2007 completely changed the landscape of the global mobile phone industry. The iPhone was designed around what he called "the best pointing device in the world": our fingers. With a 3.5-inch widescreen multi-touch display, the device combined diverse functionality with ease of use.

* The photo is for illustration purposes only

-

2010: Apple announces the iPad

On January 27, 2010, Steve Jobs introduced the iPad, Apple's fourth major innovation after the Macintosh, iPod, and iPhone. He described it as a multi-touch information device that would sit between laptops and smartphones. The iPad was central to Jobs' vision of media integration and became key to Apple's consumer market strategy.

* The photo is for illustration purposes only

-

November 2011: K computer achieves world's fastest processing speed of 10 PFLOPS

The K computer, Japan's next-generation supercomputer developed under the guidance of the Ministry of Education, Culture, Sports, Science and Technology, recorded 8.16 PFLOPS on the LINPACK benchmark in June 2011. The feat returned Japan to the top of the TOP500 list for the first time since the Earth Simulator. In November 2011, the K computer again claimed the top spot with 10 PFLOPS (10 quadrillion floating-point operations per second), and it was still leading the list as of March 2012.

LINPACK is a software library for numerical linear algebra. The LINPACK benchmark, which measures how quickly a computer solves a dense system of linear equations, is used to gauge the performance of supercomputers around the world and to determine the TOP500 ranking.
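The basic idea of such a benchmark can be sketched in a few lines: time the solution of a dense system Ax = b, then divide a fixed operation count by the elapsed time. The pure-Python sketch below assumes the conventional LINPACK operation count of 2/3·n³ + 2n² and uses textbook Gaussian elimination with partial pivoting; the function names `solve`, `linpack_style_flops`, and `run` are hypothetical. The real TOP500 benchmark (HPL) runs highly optimized, distributed code, so the rate printed here says nothing about supercomputer performance.

```python
import random
import time


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting.
    Overwrites `a` (n x n list of lists) and `b` (list of length n)."""
    n = len(a)
    for k in range(n):
        # Partial pivoting: swap in the row with the largest |a[i][k]|.
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        a[k], a[p] = a[p], a[k]
        b[k], b[p] = b[p], b[k]
        # Eliminate the entries below the pivot in column k.
        for i in range(k + 1, n):
            f = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= f * a[k][j]
            b[i] -= f * b[k]
    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / a[i][i]
    return x


def linpack_style_flops(n, seconds):
    """Floating-point operations per second, using the conventional
    LINPACK operation count 2/3*n^3 + 2*n^2 (an assumption here)."""
    return (2 / 3 * n**3 + 2 * n**2) / seconds


def run(n=100, seed=0):
    """Time one solve of a random n x n system; return (FLOPS, max error)."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    # Choose b so that the exact solution is all ones: b[i] = sum of row i.
    b = [sum(row) for row in a]
    start = time.perf_counter()
    x = solve(a, b)
    elapsed = time.perf_counter() - start
    max_error = max(abs(xi - 1.0) for xi in x)
    return linpack_style_flops(n, elapsed), max_error


if __name__ == "__main__":
    rate, err = run()
    print(f"~{rate / 1e6:.1f} MFLOPS, max error {err:.1e}")
```

Checking the computed solution against a known answer mirrors the benchmark's practice of rejecting fast but inaccurate results.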

* Screen shot of an article on the TOP500 website

-

2013: Google Glass beta test starts

Virtual reality (VR) and augmented reality (AR) are technologies that let people perceive computer-generated environments much as they perceive real-world information. VR generates purely artificial environments with computer graphics, whereas AR overlays computer-generated images on real-world scenes to extend, or augment, reality. With the spread of personal computers and smartphones, VR and AR began to be applied to consumer services in the first decade of the 2000s. Google began beta testing its wearable AR device Google Glass in 2013, setting in motion a trend toward applying AR to wearable computers such as smart glasses and smart watches.

* The photo is for illustration purposes only

-

2015: Ken Sakamura receives ITU 150 Award

The idea of connecting various objects embedded with microprocessors and sensors via networks dates back to the 1980s. Computer scientist Ken Sakamura, one of the pioneers in the field, called this concept "ubiquitous computing" and put forward an open computer system architecture known as TRON. Only much later, around 2014, did the concept become popularly known as the Internet of Things (IoT). Today, IoT is expected to significantly improve industrial efficiency and expand business opportunities. In 2015, the International Telecommunication Union (ITU) honored Sakamura with its 150th anniversary award.

* The photo is for illustration purposes only

-

2016: AlphaGo defeats a human Go master

Artificial intelligence (AI) was first established as a research discipline at a workshop held at Dartmouth College in 1956. With the introduction of commercial databases in the 1980s, investment in AI research began to gain momentum. As computer processing power has increased dramatically in recent years, AI applications based on deep learning have emerged that can uncover hidden relationships and patterns in big data in real time. In 2016, the AlphaGo program developed by DeepMind defeated Lee Sedol, one of the world's strongest Go players.

* The photo is for illustration purposes only

The ever-accelerating evolution of semiconductors

The key inventions that laid the foundation of today's information technology (IT) had already emerged by the end of the 19th century, including the light bulb by Thomas Alva Edison, the telephone by Alexander Graham Bell, wireless telegraphy by Guglielmo Marconi, and the cathode ray tube by Karl Ferdinand Braun. These basic technologies have advanced remarkably since then, due in large part to the development of semiconductors.

Let us trace the evolution of the semiconductor through the history of its epoch-making discoveries and inventions, starting with the birth of the vacuum tube, a distant ancestor of semiconductor devices.